Manga Image Translator is an open-source tool for translating the text in manga images. This introduction covers its main features, how it works, and how to try it.

Feature Highlights

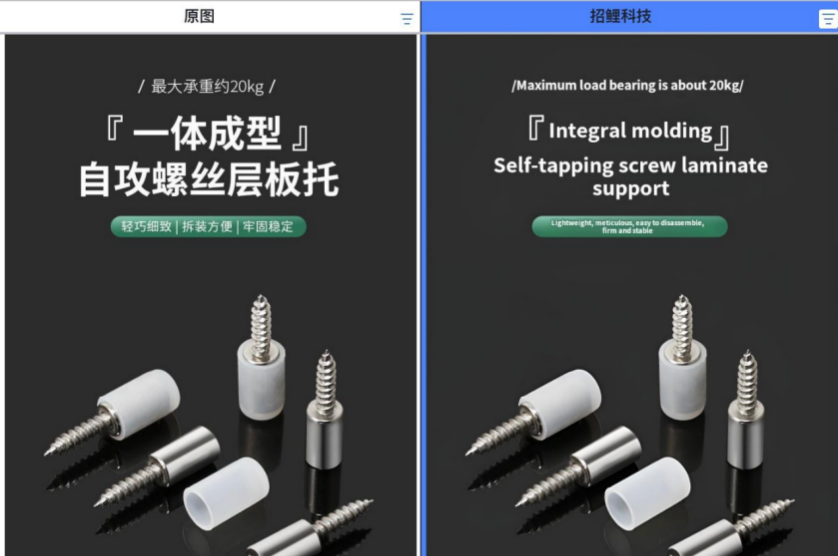

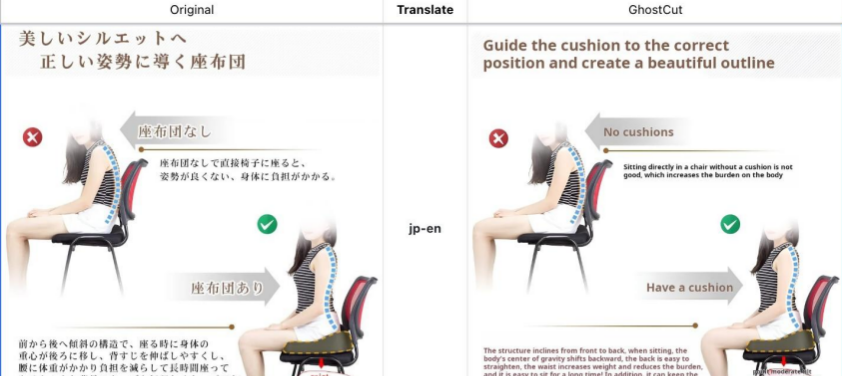

– Automatic Text Translation: It uses OCR to recognize the text in manga and other images, then automatically translates that text into the specified language.

– Multilingual Support: Originally used mainly for translating Japanese text, it now supports multiple languages, including Chinese, English, and Korean.

– Text Restoration and Coloring: It restores and colors the areas left behind after the original text is removed, so the image keeps its overall look.

– Text Rendering: The translated text is rendered to match the style of the original image, so it blends naturally into the page.

– Multiple Operating Interfaces: It offers a command-line interface for batch translation of images, and a web interface for translating and previewing a single image.

– One-click Text Removal and Multiple Service Support: It can remove the text from a manga image with one click, and it supports multiple translation services or models.

Technical Principles

– Text Recognition: First, the input image is preprocessed with steps such as grayscale conversion, denoising, and binarization. Then a Convolutional Neural Network (CNN) extracts text feature vectors. Finally, a Recurrent Neural Network (RNN) or an attention mechanism decodes those features into text.

– Translation: It uses a pre-trained translation model based on the Transformer architecture. Trained on a large parallel corpus, the model learns the mapping between languages and generates target-language text from the recognized source-language text.

– Text Rendering and Restoration: For text rendering, it selects an appropriate font color based on the image style and the original text, renders the translated text, and then composites it into the image. For text restoration, it uses a deep-learning model, either a Generative Adversarial Network (GAN) or a Convolutional Neural Network (CNN), to fill in and repair the areas where the text has been removed.
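
The preprocessing steps named under Text Recognition (grayscale conversion, denoising, binarization) can be sketched in a few lines of plain Python. This is only an illustration of those three steps, not the project's actual code: the function names, the 3x3 median filter, and the use of Otsu's method to choose the threshold are all assumptions made for the example.

```python
from statistics import median

def to_grayscale(rgb):
    """Convert rows of (R, G, B) tuples to rows of 0-255 luma values (BT.601 weights)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb]

def median_denoise(gray):
    """3x3 median filter; border pixels use only the neighbours that exist."""
    h, w = len(gray), len(gray[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [gray[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(int(median(window)))
        out.append(row)
    return out

def otsu_threshold(gray):
    """Pick the threshold that maximises between-class variance (Otsu's method)."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * c for i, c in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(256):
        w_bg += hist[t]            # pixels at or below t (background class)
        if w_bg == 0:
            continue
        w_fg = total - w_bg        # pixels above t (foreground class)
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t

def binarize(gray, threshold):
    """Mark dark pixels (likely ink) as 1 and light pixels (likely paper) as 0."""
    return [[1 if v <= threshold else 0 for v in row] for row in gray]

def preprocess(rgb):
    """Grayscale -> denoise -> binarize, returning a 0/1 ink mask."""
    gray = median_denoise(to_grayscale(rgb))
    return binarize(gray, otsu_threshold(gray))
```

Calling `preprocess(image)` on rows of RGB tuples returns a mask of the same size in which 1 marks likely ink. In practice a library such as OpenCV or Pillow would do these steps far faster; the pure-Python version is only meant to make each step visible.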

Ways to Try It

– Official Demonstration Website: Visit https://cotrans.touhou.ai/ to upload an image, select translation parameters, and get the translated result. The interface is simple and easy to use.

– Browser Script: Install the script from https://greasyfork.org/scripts/437569 in a compatible browser (one with a userscript manager installed). When you come across an image that needs translating while browsing, click the button to start the translation.

– Local Deployment: Make sure Python 3.8 or later is installed. On Windows, install Microsoft C++ Build Tools first. After cloning the project, create and activate a virtual environment, install the dependencies, and then run the tool from the command line.
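
Before installing dependencies for a local deployment, it can help to confirm that the interpreter meets the minimum version stated above. The following is a minimal sketch; `meets_minimum` and `MIN_PYTHON` are hypothetical names used for illustration and are not part of the project.

```python
import sys

MIN_PYTHON = (3, 8)  # minimum version stated in the Local Deployment notes

def meets_minimum(version=None, minimum=MIN_PYTHON):
    """Return True if a (major, minor, ...) version is at least `minimum`."""
    if version is None:
        version = sys.version_info  # version of the running interpreter
    return tuple(version[:2]) >= tuple(minimum)

if __name__ == "__main__":
    if meets_minimum():
        print("Python version OK")
    else:
        print("Python %d.%d or newer is required" % MIN_PYTHON)
```

Running this with the interpreter inside the activated virtual environment checks the Python that will actually be used to run the tool.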
