This time, the spotlight should be on Google.

Last night, Google made Gemini 2.0 available to everyone: the “most powerful” artificial intelligence model suite in Google’s history so far.

Google Gemini 2.0 is Open to All

In December last year, Google released an experimental version of Gemini 2.0 Flash, officially ushering in a new era of agentic AI. Gemini 2.0 Flash is an efficient workhorse model for developers, offering low latency and strong performance. Earlier this year, Google released an update to 2.0 Flash Thinking Experimental in Google AI Studio, which further improves performance by combining Flash's speed with the ability to reason through complex problems.

Last week, Google released an updated version of 2.0 Flash to all Gemini application users on desktop and mobile devices, hoping to help more people use Gemini in new ways for creation, interaction, and collaboration.

Google is now making the updated Gemini 2.0 Flash generally available through the Gemini API in Google AI Studio and Vertex AI, so developers can use 2.0 Flash to build production applications.

Google also released an experimental version of Gemini 2.0 Pro, its strongest model to date for coding performance and complex prompts. Besides Google AI Studio and Vertex AI, Gemini 2.0 Pro will also be available to Gemini Advanced users in the Gemini application.

In addition, Google is releasing Gemini 2.0 Flash-Lite, its most cost-effective model yet, in public preview in Google AI Studio and Vertex AI.

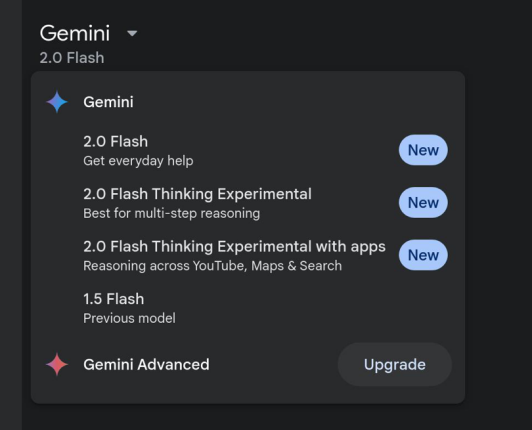

Finally, 2.0 Flash Thinking Experimental will be added to the model drop-down menu on desktop and mobile, so Gemini application users can select it at any time. All of the models above support multimodal input with text output at launch, and more modalities will follow with general availability in the coming months.

2.0 Flash: An Update for All Users

The Flash series debuted at I/O 2024 and quickly became a popular workhorse model among developers. Gemini 2.0 Flash offers a broad feature set, including native tool use, a 1-million-token context window, and multimodal input. It currently supports text output; image and audio output are on the way, and the Multimodal Live API is planned to launch fully in the coming months.

2.0 Flash is now generally available to more users across Google’s AI products, with improved performance on key benchmarks. Image generation and text-to-speech are coming soon.

Users can try Gemini 2.0 now in the Gemini application, or through the Gemini API in Google AI Studio and Vertex AI.

2.0 Pro Experimental: Google’s Best-Performing Coding Model

As Google shared early experimental versions of Gemini 2.0, such as Gemini-Exp-1206, developers gave excellent feedback on the models’ strengths and best use cases, such as coding.

In response, Google has released an experimental version of Gemini 2.0 Pro. Of all the models Google has released, Gemini 2.0 Pro Experimental has the strongest coding performance and the best ability to handle complex prompts, along with a better understanding of, and reasoning about, world knowledge. It comes with Google’s largest context window yet, 2 million tokens, which lets it analyze and understand large amounts of information, and it can call tools such as Google Search and code execution.

Gemini 2.0 Pro is now available as an experimental model to developers in Google AI Studio and Vertex AI, and to Gemini Advanced users, who can select it from the model drop-down menu on desktop and mobile.

2.0 Flash-Lite: Google’s Most Cost-Effective Model

Google said 1.5 Flash drew a lot of positive feedback for its price and speed, and that it has kept working to improve quality while holding cost and speed steady. The new 2.0 Flash-Lite delivers better quality than 1.5 Flash at the same speed and cost, and outperforms 1.5 Flash on most benchmarks.

Like 2.0 Flash, 2.0 Flash-Lite has a 1-million-token context window and supports multimodal input. For example, it can generate a one-line caption for each of roughly 40,000 unique photos for less than $1 on Google AI Studio’s paid tier.
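To make the per-photo economics concrete: keeping 40,000 captions under $1 means each caption costs at most $1 / 40,000 = $0.000025 on average. The sketch below estimates a batch job’s cost from per-item token counts and per-million-token prices. The function names are hypothetical, and the token counts and prices in the usage lines are assumptions for illustration, not figures from this article; check Google’s current pricing and token-counting documentation before relying on them.

```python
def per_item_budget(total_usd: float, num_items: int) -> float:
    """Maximum average cost per item that keeps the whole batch under total_usd."""
    return total_usd / num_items


def batch_cost_usd(
    num_items: int,
    input_tokens_per_item: int,
    output_tokens_per_item: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the cost of a batch job billed per million input/output tokens."""
    input_tokens = num_items * input_tokens_per_item
    output_tokens = num_items * output_tokens_per_item
    return (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000


if __name__ == "__main__":
    # The article's claim: roughly 40,000 captions for under $1.
    print(f"Budget per caption: ${per_item_budget(1.0, 40_000):.6f}")  # $0.000025

    # ASSUMED inputs, for illustration only: 258 input tokens per image,
    # 10 output tokens per one-line caption, and placeholder prices of
    # $0.075 (input) and $0.30 (output) per million tokens.
    estimate = batch_cost_usd(40_000, 258, 10, 0.075, 0.30)
    print(f"Estimated batch cost: ${estimate:.3f}")
```

With these placeholder inputs the estimate comes to about $0.89; real costs depend on image resolution, caption length, and the price list in effect.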

Gemini 2.0 Flash-Lite is now available in public preview in Google AI Studio and Vertex AI.

What Do Users Think?

Jeff Dean, Google’s chief scientist, praised Gemini 2.0 Pro’s coding ability in a post on X: “I love the game Boggle (a word-search game). This demo shows the coding ability of our Gemini 2.0 Pro model in AI Studio. It’s incredible that, from a relatively simple prompt, it writes complete code, with all the right data structures and search algorithms, to find every valid word on a Boggle board. As a computer scientist, I’m also happy that it got the data structures right on the first try.” He summed it up with a playful “Discombobulating!”
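The article does not reproduce the code from Dean’s demo, but the classic way to solve Boggle pairs a trie (prefix tree) of dictionary words with a depth-first search over the board, abandoning any path whose letters are not a prefix of some dictionary word. The sketch below illustrates that standard technique; it is not the code Gemini generated. It assumes single-letter tiles (no “Qu” tile), 8-way adjacency, each cell used at most once per word, and a minimum word length of 3; the names `build_trie` and `solve_boggle` are hypothetical.

```python
from typing import Dict, List, Set, Tuple


class TrieNode:
    """One node of a prefix tree built from the dictionary."""

    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.is_word = False


def build_trie(words: List[str]) -> TrieNode:
    """Insert every (upper-cased) dictionary word into a trie."""
    root = TrieNode()
    for word in words:
        node = root
        for ch in word.upper():
            node = node.children.setdefault(ch, TrieNode())
        node.is_word = True
    return root


def solve_boggle(board: List[List[str]], words: List[str], min_len: int = 3) -> Set[str]:
    """Return every dictionary word that can be traced on the board.

    A word is a path through horizontally, vertically, or diagonally
    adjacent cells that uses each cell at most once.
    """
    root = build_trie(words)
    rows = len(board)
    cols = len(board[0]) if rows else 0
    found: Set[str] = set()
    visited: Set[Tuple[int, int]] = set()

    def dfs(r: int, c: int, node: TrieNode, prefix: str) -> None:
        letter = board[r][c].upper()
        child = node.children.get(letter)
        if child is None:
            return  # no dictionary word has this path as a prefix: prune it
        word = prefix + letter
        if child.is_word and len(word) >= min_len:
            found.add(word)
        visited.add((r, c))
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (dr or dc) and 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in visited:
                    dfs(nr, nc, child, word)
        visited.discard((r, c))  # backtrack so other paths may reuse this cell

    for r in range(rows):
        for c in range(cols):
            dfs(r, c, root, "")
    return found


if __name__ == "__main__":
    board = [
        ["C", "A", "T"],
        ["X", "R", "S"],
        ["E", "A", "T"],
    ]
    dictionary = ["CAT", "CATS", "CAR", "CARS", "RAT", "SEA", "TEA", "ACE"]
    print(sorted(solve_boggle(board, dictionary)))
    # ['CAR', 'CARS', 'CAT', 'CATS', 'RAT']
```

The trie is the key design choice: it lets the search stop as soon as a path can no longer lead to any word, instead of enumerating every path on the board, whose number grows exponentially with board size.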

The full release of Gemini 2.0 has drawn wide attention online. Lin Jian (known as Keyboard), a Geek Time columnist at InfoQ, tried the API as soon as Gemini 2.0 Flash launched. He posted on X that Gemini 2.0 Flash beats DeepSeek V3 and GPT-4o mini on long-context handling, cost, and throughput.

The gap is most striking against DeepSeek V3. By his rough calculation from backend data, excluding cached tokens, Gemini 2.0 Flash costs one-sixth as much as DeepSeek V3, produces output 60 times faster, and offers a context window 16 times longer; more importantly, it natively supports all modalities.
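These “N times” figures are simple ratios, but the direction matters: for cost, lower is better, so the advantage is the competitor’s value divided by Gemini’s; for speed and context length, higher is better, so it is Gemini’s value divided by the competitor’s. The sketch below encodes that rule with a hypothetical `advantage` helper. The only concrete inputs it uses are context-window sizes, and those are assumptions rather than figures from this article: 1,048,576 tokens for Gemini 2.0 Flash and 65,536 (64K) for the DeepSeek V3 API at the time.

```python
def advantage(gemini: float, other: float, higher_is_better: bool) -> float:
    """How many times better Gemini's metric is than the competitor's.

    For "higher is better" metrics (throughput, context length) this is
    gemini / other; for "lower is better" metrics (price) it is other / gemini.
    """
    if gemini <= 0 or other <= 0:
        raise ValueError("metrics must be positive")
    return gemini / other if higher_is_better else other / gemini


if __name__ == "__main__":
    # ASSUMED context-window sizes in tokens; verify against each vendor's docs.
    gemini_flash_context = 1_048_576
    deepseek_v3_context = 65_536
    ratio = advantage(gemini_flash_context, deepseek_v3_context, higher_is_better=True)
    print(f"Context window: {ratio:.0f}x longer")  # 16x longer
```

The cost and speed ratios would follow the same pattern, but they depend on the backend figures the author used, which the article does not give.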

Some X users also ran benchmarks comparing o3-mini-high, Gemini 2.0 Flash, and Gemini 2.0 Pro.

On overall performance, Gemini 2.0 Pro ranked first in every category, 2.0 Flash ranked third, and the lower-cost 2.0 Flash-Lite made the top ten.

Although Gemini models have beaten comparable models on many benchmarks, Gemini-based products have drawn heavy criticism from users.

One user wrote: “The primary reason I don’t use Google Gemini is that it truncates input text, so I can’t simply paste long documents or other content into the prompt box as raw text.”

Another user complained: “I can’t even upload documents to Gemini, only pictures.” On Hacker News, a user named heavyarms said:

“The last time I used Gemini (just a few days ago), I still found that there was only an ‘Upload Image’ option… And I’ve been playing with Gemini off and on for several months, but I’ve never actually uploaded a picture. This is basically my view of most current Google products: immature, flawed, confusing, and unintuitive.”

Users also find the usage restrictions across Google’s model versions confusing. One user complained:

“To put it simply, I spent an hour today trying to figure out how to use the ‘Deep Research’ feature, and I still couldn’t. I bought the Business Office Standard Edition with Gemini Advanced, but I’m not sure whether I also need a VPN, need to pay extra for AI products, or need to upgrade to a more advanced office package. Google’s product line is too complex, with features tangled together, and it’s confusing. I’m starting to wonder whether Google is really reliable as an AI provider.”

Google’s API has also been widely criticized by users.

One developer wrote: “Using Google’s APIs is usually frustrating. I actually think their core cloud services are the best available, but the APIs layered on top are a mess. Of all the AI-related APIs, Google’s are the worst.”

The Next Step for Large Models: Approaching Human-Level Capabilities in All Aspects

Whether the measure is deployment cost, usage cost, or performance, the next goal for large models is clear: to bring AI’s capabilities ever closer to human level. It sounds like science fiction, but it is already under way.

In a blog post in December, Google wrote: “Over the past year, we’ve been investing in developing more agentic models, meaning they can understand more about the world around you, think multiple steps ahead, and take action on your behalf, with your supervision.” It added that Gemini 2.0 brings “new advances in multimodality, like native image and audio output, and native tool use,” and that the model series “will enable us to build new AI agents that bring us closer to our vision of a universal assistant.”

Anthropic, the Amazon-backed AI startup founded by former OpenAI research executives, is a key player in the race to build AI agents. In October, Anthropic said its AI agent could use a computer to complete complex tasks the way a person does. The startup said its computer use capability lets its technology interpret what is on a computer screen, select buttons, enter text, navigate websites, and carry out tasks through any software and real-time internet browsing.

Jared Kaplan, Anthropic’s chief scientist, said in an interview with CNBC at the time that the tool “can basically use a computer like we do.” He said it can complete tasks “in dozens or even hundreds of steps.”

OpenAI recently released a similar feature called Operator, which can automatically perform tasks such as planning a vacation, filling out forms, booking a restaurant, and ordering groceries. OpenAI describes Operator as “an agent that can go online and perform tasks for you.”

Earlier this week, OpenAI launched Deep Research, which allows an AI agent to write complex research reports and analyze questions and topics selected by users. Google launched a similar tool with the same name, Deep Research, in December last year, describing it as “a research assistant that explores complex topics and writes reports on your behalf.”

CNBC first reported in December that Google would launch a number of AI features in early 2025.

“In history, you don’t always need to be first, but you have to execute well and really be the best in class as a product,” CEO Sundar Pichai said at a strategy meeting at the time. “I think that’s what 2025 is all about.”

Slug

google-gemini-2-0-release-outperforms-deepseek-v3-features-user-feedback

Meta Description

Google releases Gemini 2.0, a powerful AI model suite. It outperforms DeepSeek V3 in cost, speed, and context. Learn about its models, 2.0 Flash, 2.0 Pro, and 2.0 Flash-Lite, plus user feedback and the future of large models.