Dify is an open-source platform for building AI applications. It integrates the concepts of Backend as a Service (BaaS) and LLMOps. It supports a range of large language models, such as Claude 3 and OpenAI's GPT models, and works with multiple model providers so that developers can choose the most suitable model for their needs. By providing powerful dataset management, visual prompt orchestration, and application operation tools, Dify significantly reduces the complexity of AI application development.

I. Dify

What is Dify (Define & Modify)? Dify is an open-source platform for developing large language model (LLM) applications, designed to simplify and accelerate the creation and deployment of generative AI applications. It combines the concepts of Backend as a Service (BaaS) and LLMOps, giving developers a user-friendly interface and a set of powerful tools for quickly building production-grade AI applications.

Low-code/no-code development: Dify lets developers define prompts, context, and plugins visually, without delving into the underlying technical details.

Modular design: Dify is built from modules, each with a clear function and interface. Developers can use only the modules they need to build their AI applications.

Rich functional components: The platform provides components including AI workflows, RAG pipelines, agents, and model management, supporting developers all the way from prototype to production.

Support for multiple large language models: Dify supports mainstream models from multiple providers, so developers can choose the model that best fits their requirements.

Dify offers four types of LLM-based applications, each of which can be optimized and customized for different scenarios and needs.

Chat Assistant: An LLM-based conversational assistant that interacts with users in natural language, understands their questions, requests, or instructions, and responds with answers or performs the corresponding operations.

Text Generation: Focuses on text-generation tasks, such as creative writing (stories, news reports, copywriting, and poems), as well as text classification, translation, and similar tasks.

Agent: Beyond dialogue, an agent can decompose tasks, reason, and call tools. It can understand complex instructions, break a task into subtasks, and call the appropriate tools or APIs to complete them.

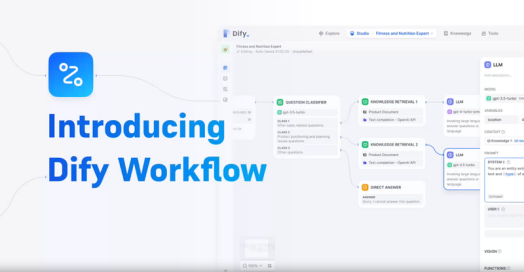

Workflow: Flexibly orchestrates and controls LLM processing according to a user-defined process. Users can define a series of operation steps and logical conditions so that the LLM executes tasks according to the predefined process.
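Apps built in Dify can also be called programmatically. As a minimal sketch, assuming the Dify Service API's `POST /v1/chat-messages` endpoint with the app's API key sent as a Bearer token (based on our reading of Dify's API documentation; field names may differ between versions), the following builds a blocking request for a Chat Assistant app using only the Python standard library. `DIFY_BASE_URL` and `build_chat_request` are hypothetical names introduced here.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Dify Cloud base URL; self-hosted deployments use their own host.
DIFY_BASE_URL = "https://api.dify.ai/v1"


def build_chat_request(api_key: str, query: str, user: str,
                       conversation_id: str = "",
                       inputs: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a chat-messages request for a Chat Assistant app.

    The endpoint path and body fields are assumptions based on the Dify
    Service API docs; verify them against your Dify version.
    """
    body = {
        "inputs": inputs or {},              # values for app-defined variables
        "query": query,                      # the end user's message
        "response_mode": "blocking",         # "streaming" would return server-sent events
        "conversation_id": conversation_id,  # empty string starts a new conversation
        "user": user,                        # stable identifier for the end user
    }
    return urllib.request.Request(
        url=f"{DIFY_BASE_URL}/chat-messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON body; per the same documentation, the reply text is expected under its `answer` field in blocking mode.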

II. Dify + RAG

How do you upload documents to a Dify knowledge base to build RAG? The process involves several steps, from file selection and preprocessing to index mode selection and retrieval settings, with the aim of building an efficient knowledge retrieval system.

1. Create a knowledge base: Click “Knowledge” in Dify's main navigation bar. This page lists your existing knowledge bases.

• Create a new knowledge base: Drag and drop or select the files to upload. Batch uploads are supported, but the number of files is limited by your subscription plan.

• Empty knowledge base option: If you haven’t prepared the documents yet, you can choose to create an empty knowledge base.

• External data sources: When you use an external data source (such as Notion or website sync), the knowledge base type is fixed to that source. It is recommended to create a separate knowledge base for each data source.

2. Text preprocessing and cleaning: After content is uploaded to the knowledge base, it is split into chunks and cleaned. This stage is the preprocessing and structuring of the content.

• Automatic mode: Dify automatically splits and cleans the content, simplifying the document preparation process.

• Custom mode: When finer-grained control is required, choose custom mode to adjust the chunking and cleaning rules manually.
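To make the chunking and cleaning stage concrete, here is a minimal sketch of what a custom rule might do: collapse runs of whitespace, then split the text into fixed-length chunks that overlap. This is a generic illustration, not Dify's actual splitter; `max_len` and `overlap` are hypothetical parameters measured in characters.

```python
import re
from typing import List


def clean_text(text: str) -> str:
    """Collapse consecutive spaces/tabs and runs of 3+ newlines, then trim."""
    text = re.sub(r"[ \t]+", " ", text)
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


def chunk_text(text: str, max_len: int = 500, overlap: int = 50) -> List[str]:
    """Split text into chunks of at most max_len characters.

    Consecutive chunks share `overlap` characters, which preserves some
    context across chunk boundaries.
    """
    if not 0 <= overlap < max_len:
        raise ValueError("overlap must be in [0, max_len)")
    step = max_len - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + max_len])
        if start + max_len >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

For example, `chunk_text("abcdefghij", max_len=4, overlap=1)` yields `["abcd", "defg", "ghij"]`. Real splitters usually prefer to cut at a delimiter such as a paragraph break rather than at a fixed character count.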

3. Index mode: Choose the index mode that suits your application scenario: high-quality mode, economy mode, or question-answer mode.

• High-quality mode: Uses an embedding model to convert text into numerical vectors, supporting vector retrieval, full-text retrieval, and hybrid retrieval.

• Economy mode: Uses an offline vector engine and a keyword index. Accuracy is lower, but it avoids additional token consumption and the associated costs.

• Question-answer mode: The system segments the text and generates question-answer (QA) pairs for each segment by summarizing its content.
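The chunking and index-mode choices can also be made through Dify's Knowledge API instead of the UI. As a hedged sketch, assuming a `POST /datasets/{dataset_id}/document/create-by-text` endpoint and the `indexing_technique` (`high_quality` or `economy`) and `process_rule.mode` body fields as we understand them from Dify's API documentation, the helpers below build the URL and request body. The exact path has varied between Dify versions, so check your deployment's API reference. `build_create_document_body` and `create_document_url` are hypothetical helpers.

```python
from typing import Dict

# Assumed values of the "indexing_technique" field (high-quality vs economy mode).
VALID_INDEXING = {"high_quality", "economy"}


def build_create_document_body(name: str, text: str,
                               indexing_technique: str = "high_quality") -> Dict:
    """Build a JSON-ready body for creating a document from raw text.

    Field names are assumptions based on Dify's Knowledge API docs. Only the
    automatic chunking mode is shown; custom mode takes extra rule fields.
    """
    if indexing_technique not in VALID_INDEXING:
        raise ValueError(f"unknown indexing technique: {indexing_technique!r}")
    return {
        "name": name,                              # document title in the knowledge base
        "text": text,                              # raw content to be chunked and indexed
        "indexing_technique": indexing_technique,  # "high_quality" or "economy"
        "process_rule": {"mode": "automatic"},     # let Dify chunk and clean the text
    }


def create_document_url(base_url: str, dataset_id: str) -> str:
    """Assumed endpoint path; verify it against your Dify version's API reference."""
    return f"{base_url.rstrip('/')}/datasets/{dataset_id}/document/create-by-text"
```

The body would then be sent as JSON with an `Authorization: Bearer <knowledge API key>` header.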

4. Retrieval settings:

• In high-quality index mode, Dify provides three retrieval settings: vector search, full-text search, and hybrid search.

• Vector search: Vectorizes the query, computes its distance to the text vectors in the knowledge base, and returns the closest text chunks.

• Full-text search: Retrieves chunks by keyword matching.

• Hybrid search: Runs vector search and full-text search together and merges their results, combining the strengths of both.

• Rerank model: Semantically re-ranks the retrieved results to improve their ordering.

• In economy index mode, Dify provides a single retrieval setting: inverted index with TopK.

• Inverted index: An index structure that maps each keyword to the documents containing it, enabling fast keyword lookup.

• TopK and score threshold: Set the maximum number of results to return and the minimum similarity score a result must reach.
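The retrieval settings above can be illustrated with a toy, self-contained sketch: an inverted index for keyword (full-text) scoring, cosine similarity over precomputed vectors for vector search, a weighted sum to merge the two for hybrid search, and TopK plus a score threshold to filter the results. This is not Dify's implementation; the weighted-sum fusion, the `alpha` weight, and the tiny hand-made vectors standing in for embeddings are assumptions chosen for illustration. A rerank model would then reorder the final list using a separate model.

```python
import math
from collections import defaultdict
from typing import Dict, List, Set, Tuple


def build_inverted_index(chunks: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map each lowercase keyword to the IDs of the chunks that contain it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for chunk_id, text in chunks.items():
        for word in text.lower().split():
            index[word].add(chunk_id)
    return index


def keyword_scores(query: str, index: Dict[str, Set[str]]) -> Dict[str, float]:
    """Score each chunk by the fraction of query terms it contains."""
    terms = query.lower().split()
    scores: Dict[str, float] = defaultdict(float)
    for term in terms:
        for chunk_id in index.get(term, ()):
            scores[chunk_id] += 1 / len(terms)
    return scores


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_search(query: str, query_vec: List[float],
                  chunks: Dict[str, str], vectors: Dict[str, List[float]],
                  alpha: float = 0.5, top_k: int = 3,
                  score_threshold: float = 0.0) -> List[Tuple[str, float]]:
    """Blend vector and keyword scores, then apply the threshold and TopK."""
    kw = keyword_scores(query, build_inverted_index(chunks))
    results = []
    for chunk_id in chunks:
        vec_score = cosine(query_vec, vectors[chunk_id])
        score = alpha * vec_score + (1 - alpha) * kw.get(chunk_id, 0.0)
        if score >= score_threshold:
            results.append((chunk_id, score))
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results[:top_k]
```

Setting `alpha=1.0` reduces this to pure vector search and `alpha=0.0` to pure keyword search, which mirrors the three retrieval settings in high-quality mode.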

III. Dify + Agent

How do you build an agent on the Dify platform? You create and deploy an agent by selecting a model, writing prompts, adding tools and knowledge bases, configuring the inference mode and dialogue starter, and finally debugging, previewing, and publishing it as a Webapp.

1. Explore and integrate application templates

The Dify platform provides an “Explore” section containing multiple application templates for agent assistants. Users can add these templates directly to their workspaces and start using them right away. Users can also create custom agent assistants to meet specific personal or organizational needs.

2. Select the inference model

An agent assistant's ability to complete tasks depends largely on the reasoning ability of the selected LLM. More capable model series such as GPT-4 are recommended for more stable and accurate results.

3. Write prompts and set the process

In the “Instructions” section, users can describe the agent assistant's task objectives, workflow, required resources, and constraints. This information helps the agent assistant understand and carry out its tasks.

4. Add tools and knowledge bases

• Tool integration: In the “Tools” section, users can add built-in or custom tools to extend the agent assistant's capabilities, such as internet search, scientific computing, and image generation, enabling richer interaction with the real world.

• Knowledge base: In the “Context” section, users can attach knowledge bases to give the agent assistant external background knowledge and information retrieval capabilities.

5. Inference mode setting

Dify supports two inference modes: Function Calling and ReAct.

• Function Calling: For models that support this mode (such as GPT-3.5 and GPT-4), it is recommended for better and more stable performance.

• ReAct: For model series that do not support Function Calling, Dify provides the ReAct reasoning framework as an alternative that achieves similar results.
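To show how ReAct differs from native Function Calling, here is a minimal sketch of a ReAct-style loop: the model's text output is parsed for `Action:` and `Action Input:` lines, the named tool is run, and its result is appended as an `Observation:` for the next step. The prompt format, the `scripted_model` stand-in for a real LLM, and the `calculator` tool are all hypothetical; Dify's own ReAct prompt and parser may differ. With Function Calling, the model instead returns a structured tool call, so no text parsing is needed.

```python
import re
from typing import Callable, Dict


def calculator(expression: str) -> str:
    """Hypothetical tool: evaluates 'a + b' or 'a * b' on non-negative integers."""
    match = re.fullmatch(r"\s*(\d+)\s*([+*])\s*(\d+)\s*", expression)
    if not match:
        return "error: unsupported expression"
    a, op, b = int(match.group(1)), match.group(2), int(match.group(3))
    return str(a + b if op == "+" else a * b)


TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}

ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\nAction Input:\s*(.+)")
FINAL_RE = re.compile(r"Final Answer:\s*(.+)")


def react_loop(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate model reasoning and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        output = model(transcript)
        transcript += output + "\n"
        final = FINAL_RE.search(output)
        if final:
            return final.group(1).strip()
        action = ACTION_RE.search(output)
        if not action:
            return "error: model produced neither an action nor a final answer"
        tool = TOOLS.get(action.group(1))
        observation = tool(action.group(2).strip()) if tool else "error: unknown tool"
        transcript += f"Observation: {observation}\n"  # fed back on the next step
    return "error: step limit reached"


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM: calls the calculator once, then answers."""
    if "Observation:" not in transcript:
        return "Thought: I should compute this.\nAction: calculator\nAction Input: 6 * 7"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"
```

Calling `react_loop("What is 6 times 7?", scripted_model)` returns `"42"` after one tool call. The `max_steps` limit guards against a model that never emits a final answer.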

6. Configure the dialogue starter

Users can set opening remarks and suggested initial questions for the agent assistant, so that when users first interact with it, it shows the types of tasks it can perform and example questions they can ask.

7. Debugging and previewing

Before publishing the agent assistant as an application, users can debug and preview it on the Dify platform to evaluate how effectively and accurately it completes tasks.

8. Application publishing

Once the agent assistant is configured and debugged, users can publish it as a Web application (Webapp) for others to use, making its functions and services available to a wider audience across platforms and devices.
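Besides the Webapp, a published app can be called over Dify's Service API. As we understand Dify's documentation, Agent apps support only the `streaming` response mode of `POST /v1/chat-messages`, which returns server-sent events whose JSON payloads carry an `event` field (for example `agent_message` chunks and a closing `message_end`). Under those assumptions, the sketch below reassembles the answer text from such a stream. It takes an iterable of already-decoded text lines, so it can be exercised without a network connection; `collect_agent_answer` is a hypothetical helper, and the event names should be verified against your Dify version.

```python
import json
from typing import Iterable


def collect_agent_answer(lines: Iterable[str]) -> str:
    """Concatenate the `answer` fragments from a Dify-style SSE stream.

    Assumes each event arrives as a line of the form 'data: {...json...}',
    that answer text comes in 'agent_message' (or 'message') events, and
    that the stream ends with 'message_end'. These names are assumptions.
    """
    parts = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separators and non-data lines such as pings
        payload = json.loads(line[len("data:"):].strip())
        event = payload.get("event")
        if event in ("agent_message", "message"):
            parts.append(payload.get("answer", ""))
        elif event == "message_end":
            break  # nothing after this belongs to the current answer
        elif event == "error":
            raise RuntimeError(payload.get("message", "stream error"))
    return "".join(parts)
```

With `urllib.request.urlopen`, each line of the response is `bytes`, so decode it (for example `line.decode("utf-8")`) before passing the lines in.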